One of the biggest obstacles to widespread adoption of always-on AR – behind the cost and size of hardware – is the lack of a suitable interface that is both convenient and unobtrusive. Many people predict that speech will become the next OS with the advent of AR, but do you really want to be calling out your shopping list – or worse – in public? Facebook has a more elegant – and ambitious – solution, as shared by Regina Dugan, VP of Engineering and Head of Building 8, at this week’s F8 developer conference. They want to read your mind.

Mind And Body, Digital And Physical

Dugan’s opening manifesto stated that Facebook’s goal is to create “products that recognise we are both mind and body. That our world is both digital and physical. That seek to connect us to the power and possibility of what’s new, while honouring the intimacy and richness of what’s timeless.”

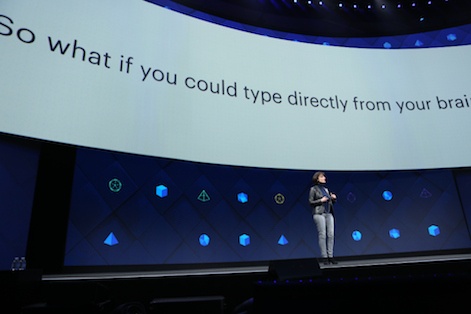

She explained that a human brain can compute 1 TBps, but talking conveys only about 100 Bps, prompting her to describe speech as “a lossy compression algorithm”. So she asked, “What if you could type directly from your brain?” Privacy issues aside, it is, as Dugan said, “just the kind of fluid human-computer interface needed for AR”.

Future Now

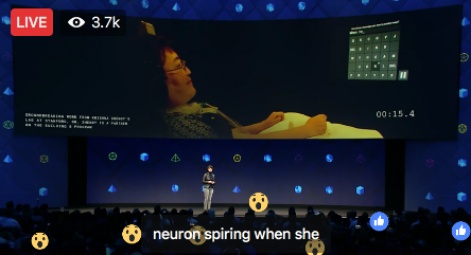

Dugan showed a video of a woman with ALS typing at eight words per minute via surgical implants. That may sound slow by secretarial standards, but this woman is typing using her mind alone. Facebook’s own project is targeting 100 wpm.

The system that Dugan portrayed decodes speech rather than ‘random thoughts’. She drew an analogy with photography: “You take many photos; you choose to share some of them”, and thoughts are like that. Facebook’s silent speech interface would decode “the words that you’ve decided to share by sending them to the speech centre of your brain”.

Non-Invasive Research

But not everybody is willing to go under the knife just to control their AR, and no non-invasive technology can accomplish this today. Facebook has a team of more than 60 scientists and engineers working on the problem, starting with optical imaging and filtering for quasi-ballistic photons.

Communication Without Barriers

Dugan went on to open a philosophical can of semiotic worms by suggesting that this interface may be able to share thoughts independent of language, breaking down language barriers as effectively as Douglas Adams’ fictional Babel Fish. This could be a huge boon to global social VR. She also showed research into ‘hearing’ through actuators on your skin, so users can feel the acoustic shape of a word – invaluable for deaf/blind users.

Party Like It’s 1984

Anticipating accusations of creating an Orwellian nightmare of mind control and thought crime, Dugan asked, “Is it a little terrifying? Of course.” Her reason for pressing ahead anyway? “Because this matters.”

Dugan chose to close her presentation with three points that wouldn’t look out of place on a horror film poster:

- Be curious

- Be terrified

- Call your Mom