We were treated to a guerilla demo of indie project GNOSIS at VR Connects London earlier this year and it made a lasting impression on us. The way it presents information to the user – and how it allows them to navigate it – is unlike anything we’d seen before.

Here, lone developer Robert Bogucki recounts the journey of creating an award-nominated, data-rich VR artefact born out of a passion for graphics and data, and reflects on what it means to do VR technology justice.

I’m a marine hydrographer by trade and a VJ/creative coder by passion; those disciplines are not as unrelated as they might seem. Hydrographic surveying involves a mix of earth sciences, IT, multi-sensor data fusion (GPS, inertial, sonar) and data processing. Working with the huge seafloor mapping datasets used for charting or subsea construction would simply not be possible at the scale and accuracy required today without the advances in graphics processors brought about by the games industry.


Growing up as a gamer, I was always fascinated by how hardware capabilities and limitations unleash creativity, with the architectures of graphics and sound chips giving rise to new artistic styles. My passion for graphics led me to ocean mapping and an MSc at the University of New Hampshire, where I created an Augmented Reality system using the open-source Ogre3D game engine and the ARToolKit and OpenCV libraries.

Fusion of data streams also features heavily in the set-ups interactive media artists typically use for installations and live performances; these often quite complex systems interface software and hardware via the MIDI and OSC protocols, the DMX standard and video texture sharing.

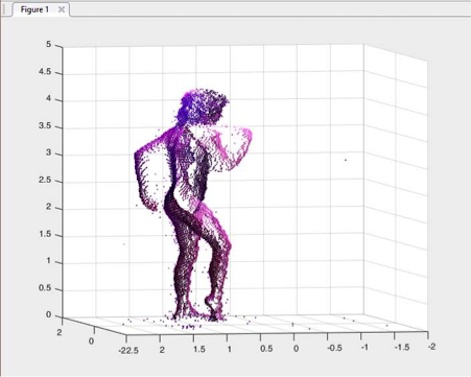

Hydrographers usually work five weeks on, five weeks off, which leaves me time for passion projects. These included Machineparanoia, built with the Ogre3D game engine, Resolume and Spout. We developed it further by adding a Kinect sensor that let festivalgoers mix their dance moves into the projected VJ mix for an installation in Germany. This led to using Kinects to capture dance performances, which became the basis of the project I’ve been working on ever since...

Gnosis Launch Trailer from VJ Rybyk on Vimeo.

List of topics covered in this article:

- Unity And Unreal In Performative Art

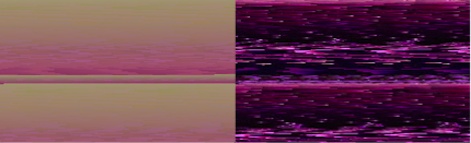

- Packing Pixels

- From Dance To Texture

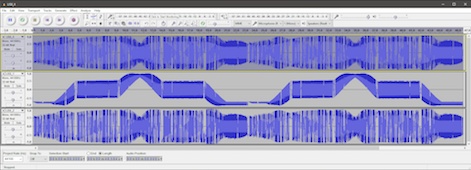

- Sound In Space

- Flying Solo

- Synthesis Of The Arts

- Doing VR Justice
